The terraform import command lets you bring resources that already exist in a provider, in this case AWS, under HashiCorp Terraform management. However, it imports only one resource per run. That is tedious at best and impractical in some situations, such as the records of a Route53 DNS zone: the task becomes unmanageable with multiple DNS zones, each holding tens or hundreds of records. In this article I offer a bash script that imports all the records of a Route53 DNS zone into Terraform in a matter of seconds or a few minutes.
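To give an idea of what automating this looks like, here is a minimal sketch. It is not the script from the article. It assumes the `aws_route53_record` import ID format `ZONEID_RECORDNAME_TYPE`, and the helper name `import_cmd` and the way the Terraform resource name is derived from the record name are hypothetical choices made for illustration.

```shell
# Print the terraform import command for one Route53 record.
# Hypothetical helper: arguments are hosted zone ID, record name, record type.
import_cmd() {
  zone_id=$1
  name=${2%.}   # drop the trailing dot that Route53 appends to record names
  type=$3
  # Build a resource name from the record name (assumption: any character
  # other than a letter, digit or underscore becomes "_").
  resource=$(printf '%s_%s' "$name" "$type" | tr -c 'A-Za-z0-9_' '_')
  printf 'terraform import aws_route53_record.%s %s_%s_%s\n' \
    "$resource" "$zone_id" "$name" "$type"
}

# Possible use against a real zone (needs aws-cli and credentials):
# aws route53 list-resource-record-sets --hosted-zone-id "$ZONE_ID" \
#   --query 'ResourceRecordSets[].[Name,Type]' --output text |
#   while read -r name type; do import_cmd "$ZONE_ID" "$name" "$type"; done
```

The sketch only prints the commands so they can be reviewed before running them. Each import also needs a matching `aws_route53_record` resource block in the configuration, and wildcard records or names starting with a digit would need extra handling that is not covered here.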

Script to automatically change all gp2 volumes to gp3 with aws-cli

Last December Amazon announced its new EBS gp3 volumes, which offer better performance and a 20% cost saving compared to the gp2 volumes used until now. After successfully testing these new volumes with multiple clients, I can only recommend them: in the two and a half months since the announcement I have not noticed any problems or side effects.
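As a rough sketch of the idea (not the script from the article), the conversion can be done with two aws-cli calls: `describe-volumes` to list the gp2 volumes and `modify-volume` to change their type. The function name `migrate_gp2_to_gp3` is hypothetical, and the sketch assumes aws-cli is already configured with credentials and a default region.

```shell
# Convert every gp2 volume in the current region to gp3.
# Hypothetical helper; assumes aws-cli is configured.
migrate_gp2_to_gp3() {
  # --output text returns the volume IDs separated by whitespace
  for volume_id in $(aws ec2 describe-volumes \
      --filters Name=volume-type,Values=gp2 \
      --query 'Volumes[].VolumeId' --output text); do
    echo "Converting $volume_id"
    aws ec2 modify-volume --volume-id "$volume_id" --volume-type gp3 >/dev/null
  done
}

# Run it explicitly once you have checked the list of volumes:
# migrate_gp2_to_gp3
```

The function is only defined here and never called, so nothing changes until you run it yourself.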

How to automatically update all your AWS EC2 security groups when your dynamic IP changes

One of the biggest annoyances of working with AWS over an Internet connection with a dynamic IP is that, when the IP changes, you immediately lose access to all servers and services protected by an EC2 security group whose rules only allow traffic from certain specific IPs instead of allowing open connections from everyone (0.0.0.0/0).

How to perform MySQL/MariaDB backups: mysqldump command examples

Although there are different methods for backing up MySQL and MariaDB databases, the most common and effective one is to use a native tool that both MySQL and MariaDB provide for this purpose: the mysqldump command. As its name suggests, this is a command-line program that performs a complete export (dump) of all the contents of a database, or even of all the databases in a running MySQL or MariaDB instance. It also allows partial backups, i.e. only some specific tables, or even only a subset of the records in a table.

The mysqldump command offers a multitude of parameters that make it very powerful and flexible. Since having so many options can be confusing, in this post I collect the most frequent usage examples, with the parameters that are most useful in the day-to-day life of a system administrator.
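As a quick taste, the cases mentioned above (one database, all databases, some tables, a subset of rows) could look like the following sketch. The wrapper function names are hypothetical, the credentials are assumed to come from the `[client]` section of `~/.my.cnf`, and `--single-transaction` is used on the assumption that the tables are InnoDB.

```shell
# Each helper writes the dump to stdout; redirect it to a file.
# Assumption: credentials are read from ~/.my.cnf, so no -u/-p here.

# Complete dump of a single database
dump_database() { mysqldump --single-transaction "$1"; }

# Complete dump of every database in the instance
dump_all() { mysqldump --single-transaction --all-databases; }

# Partial dump: only the listed tables of one database
dump_tables() {
  db=$1
  shift
  mysqldump --single-transaction "$db" "$@"
}

# Partial dump: only the rows of one table that match a condition
dump_rows() { mysqldump --single-transaction --where="$3" "$1" "$2"; }
```

For example, `dump_tables shop orders customers > shop_tables.sql` would export two tables of a hypothetical `shop` database, and `dump_rows shop orders 'id < 100' > first_orders.sql` only the matching rows of `orders`.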

How to create a user in MySQL/MariaDB and grant permissions on a specific database

How to enlarge the size of an EBS volume in AWS and extend an ext4 partition

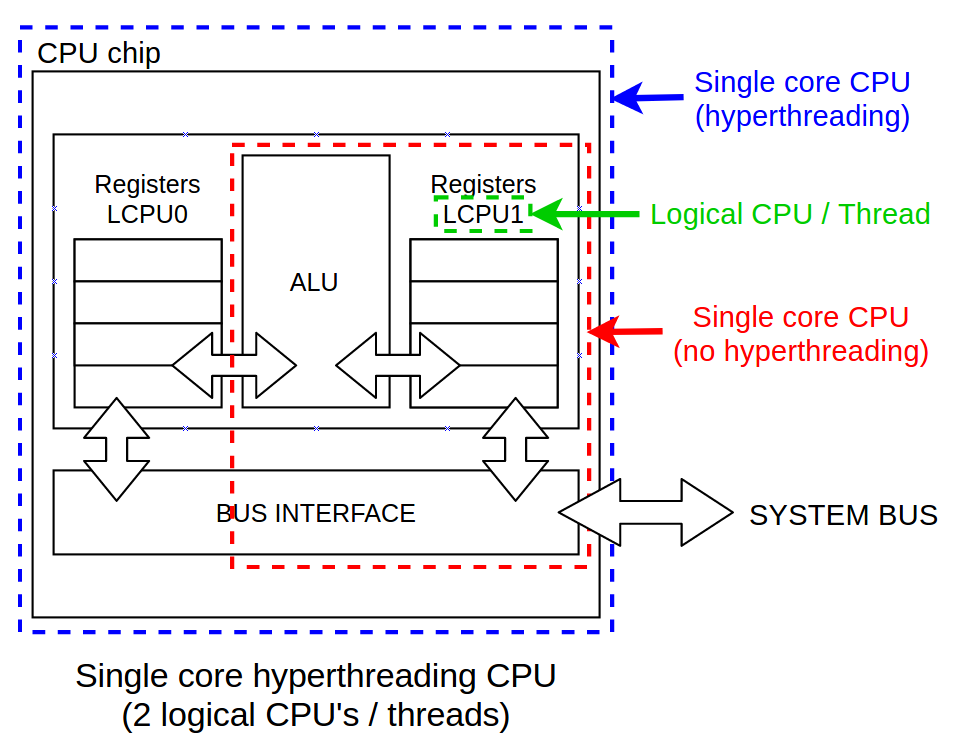

Differences between physical CPU vs logical CPU vs Core vs Thread vs Socket

When we try to understand a computer’s architecture and performance at CPU level using Linux commands like nproc or lscpu, we often find that we cannot properly interpret their results because we confuse terms such as physical CPU, logical CPU, virtual CPU, core, thread and socket. If we add concepts like HyperThreading (not to be confused with multithreading), we end up unsure how many cores our box has, or unable to understand why commands like htop report 8 CPUs when we thought we had bought a single quad-core processor. In short, it’s a mess.
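The htop example comes down to simple arithmetic: the number of logical CPUs is sockets × cores per socket × threads per core. A minimal sketch, where the function name `logical_cpus` is hypothetical and the three inputs correspond to the `Socket(s)`, `Core(s) per socket` and `Thread(s) per core` fields reported by lscpu:

```shell
# Logical CPUs = sockets x cores per socket x threads per core
logical_cpus() {
  echo $(( $1 * $2 * $3 ))
}

# One quad-core processor with two hardware threads per core
logical_cpus 1 4 2   # prints 8
```

On a real machine the result should match what nproc reports when all CPUs are online and available to the process.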